Achieving Trajectory Stitching Using Non-Stitching Models

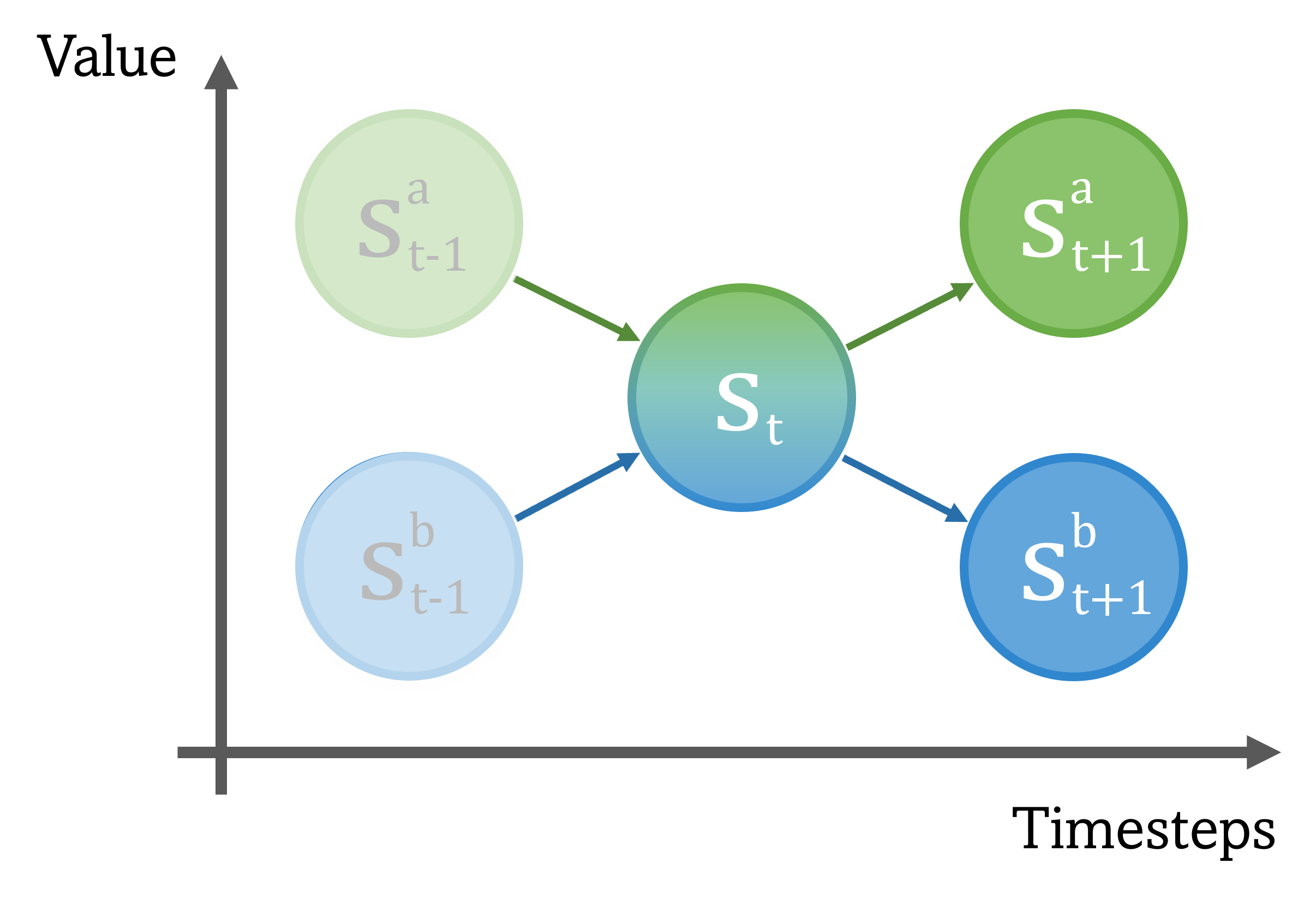

We show that trajectory stitching is achievable without explicitly training Decision Transformer (DT) to stitch. The key insight is that DT can stitch if we adjust the length of the history it conditions on. Consider two trajectories \(s^a\) and \(s^b\) in a dataset that pass through a common state \(s_t\). A non-stitching model starting from \( s_{t-1}^b \) conditions on that history, so it follows trajectory \(b\) to the sub-optimal state \( s_{t+1}^b \), as recorded in the dataset. If we instead reduce the history length to 1 at \( s_t \), the model conditions only on \( s_t \) and can stitch to \( s_{t+1}^a \). This produces a better trajectory, because the transition \( s_t \rightarrow s_{t+1}^a \) exists in the dataset.
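The effect of the history length can be illustrated with a toy sketch. This is not DT. It assumes a hypothetical lookup "model" that matches the last `k` states against the dataset and, among the continuations it finds, picks the one with the highest return-to-go. The trajectories and returns are made up. With the full history, only trajectory \(b\) matches, so the model reproduces \(s_{t+1}^b\). With history length 1, both trajectories match at \(s_t\), and the model can stitch to \(s_{t+1}^a\).

```python
from typing import List, Optional, Tuple

# Hypothetical trajectories sharing the state "s_t"; each entry is
# (state, return-to-go from that state). Values are illustrative only.
TRAJ_A = [("s^a_{t-1}", 10.0), ("s_t", 9.0), ("s^a_{t+1}", 8.0)]
TRAJ_B = [("s^b_{t-1}", 3.0), ("s_t", 2.0), ("s^b_{t+1}", 1.0)]
DATASET = [TRAJ_A, TRAJ_B]


def next_state(
    history: List[str], k: int, dataset: List[List[Tuple[str, float]]]
) -> Optional[str]:
    """Toy stand-in for a sequence model that conditions on the last k states.

    Finds every dataset position whose preceding k states equal the context
    and returns the in-support next state with the highest return-to-go.
    """
    context = history[-k:]
    best_state, best_rtg = None, float("-inf")
    for traj in dataset:
        states = [s for s, _ in traj]
        for i in range(len(states) - 1):
            start = i - k + 1
            if start < 0:
                continue  # not enough preceding states for a length-k window
            if states[start:i + 1] == context:
                cand_state, cand_rtg = traj[i + 1]
                if cand_rtg > best_rtg:
                    best_state, best_rtg = cand_state, cand_rtg
    return best_state


history = ["s^b_{t-1}", "s_t"]
print(next_state(history, k=2, dataset=DATASET))  # full history: stays on trajectory b
print(next_state(history, k=1, dataset=DATASET))  # history length 1: stitches to trajectory a
```

Shortening the context enlarges the set of dataset transitions that "match", which is what makes the better continuation reachable.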

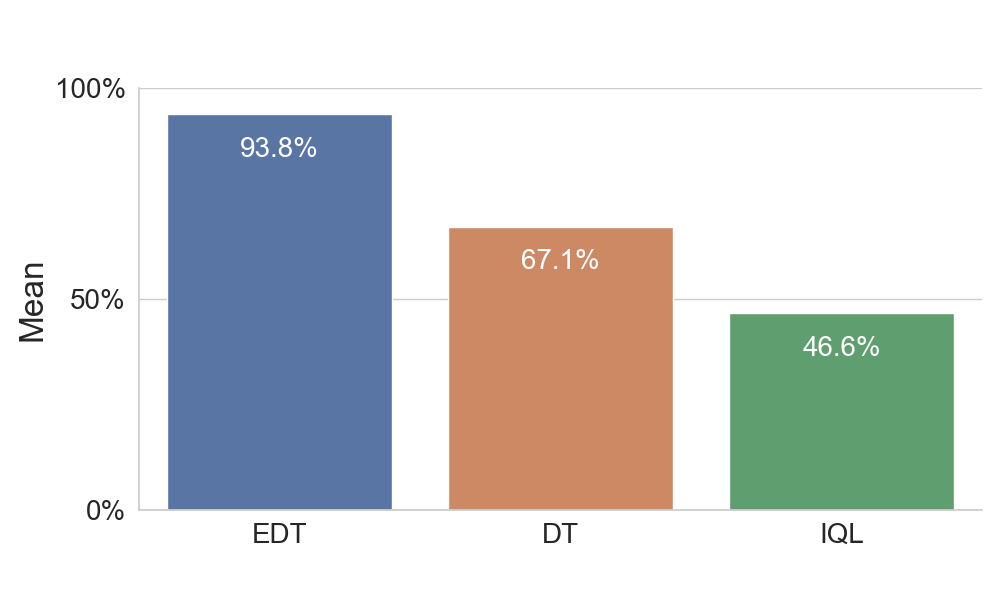

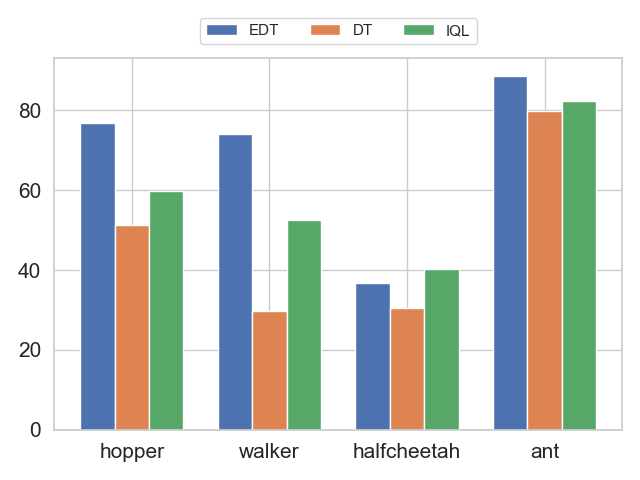

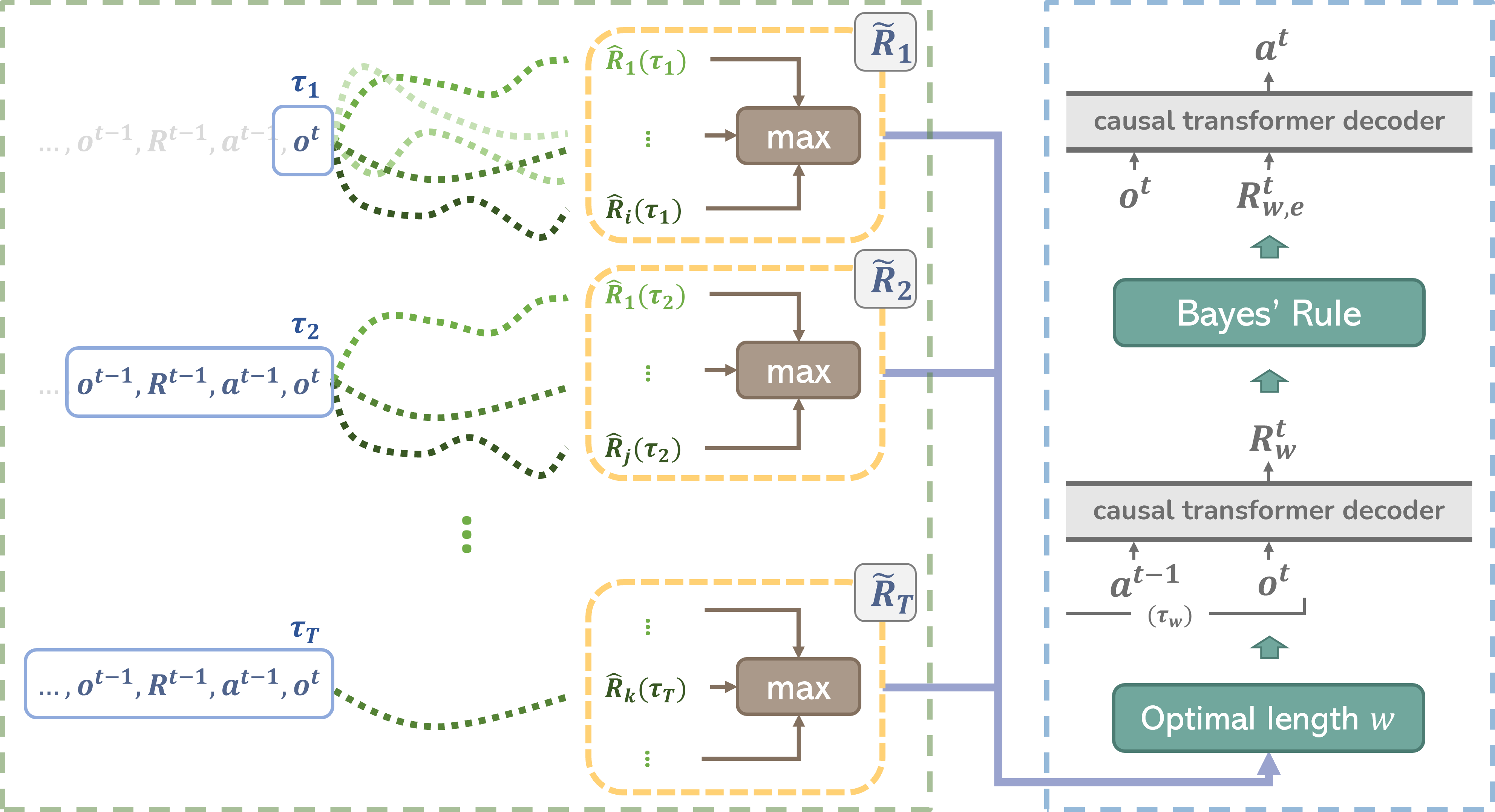

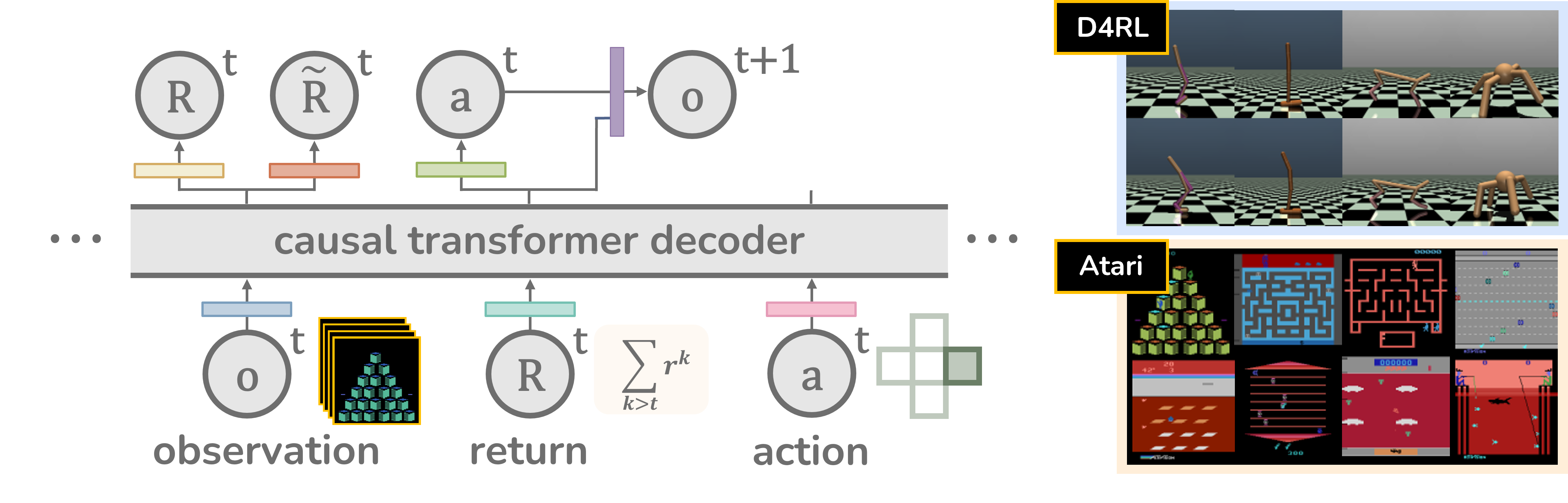

We propose an architecture that uses expectile regression to approximate the maximum in-support return \( \tilde{R} \) for each history length. Estimating this value lets us select the optimal history length for stitching. We show that the proposed method outperforms DT and its variants in a multi-task regime on the D4RL locomotion benchmark and on Atari games.
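A minimal sketch of the selection idea, assuming scalar return samples per history length instead of a learned network. The asymmetric squared loss \( |\tau - \mathbb{1}(u < 0)|\,u^2 \) with \( u = R - m \) has the \( \tau \)-expectile as its minimizer, and as \( \tau \to 1 \) that expectile approaches the maximum return observed in the data. The names `fit_expectile`, `best_history_length`, and the sample returns are hypothetical and for illustration only.

```python
from typing import Dict, List, Sequence

import numpy as np


def expectile_loss(target: Sequence[float], pred: float, tau: float) -> float:
    """Asymmetric squared loss: residuals above pred weigh tau, below weigh 1 - tau."""
    u = np.asarray(target, dtype=float) - pred
    weight = np.where(u < 0, 1.0 - tau, tau)
    return float(np.mean(weight * u ** 2))


def fit_expectile(samples: Sequence[float], tau: float, iters: int = 200) -> float:
    """Minimize expectile_loss over a scalar via iteratively reweighted means."""
    x = np.asarray(samples, dtype=float)
    m = float(x.mean())  # tau = 0.5 gives the mean, a natural starting point
    for _ in range(iters):
        w = np.where(x >= m, tau, 1.0 - tau)
        m_new = float(np.sum(w * x) / np.sum(w))  # stationary point for fixed weights
        converged = abs(m_new - m) < 1e-10
        m = m_new
        if converged:
            break
    return m


def best_history_length(returns_by_length: Dict[int, List[float]], tau: float = 0.99) -> int:
    """Pick the history length whose high-tau expectile (approx. max return) is largest."""
    estimates = {k: fit_expectile(r, tau) for k, r in returns_by_length.items()}
    return max(estimates, key=estimates.get)


# Hypothetical in-support returns reachable when conditioning on k past steps.
RETURNS_BY_LENGTH = {1: [2.0, 8.0, 3.0], 4: [2.0, 2.5, 3.0], 8: [1.0, 2.0, 2.0]}
print(best_history_length(RETURNS_BY_LENGTH))
```

Using an expectile rather than a plain maximum keeps the estimate a smooth regression target that a network can be trained on, while a large \( \tau \) still biases it toward the best return supported by the data.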